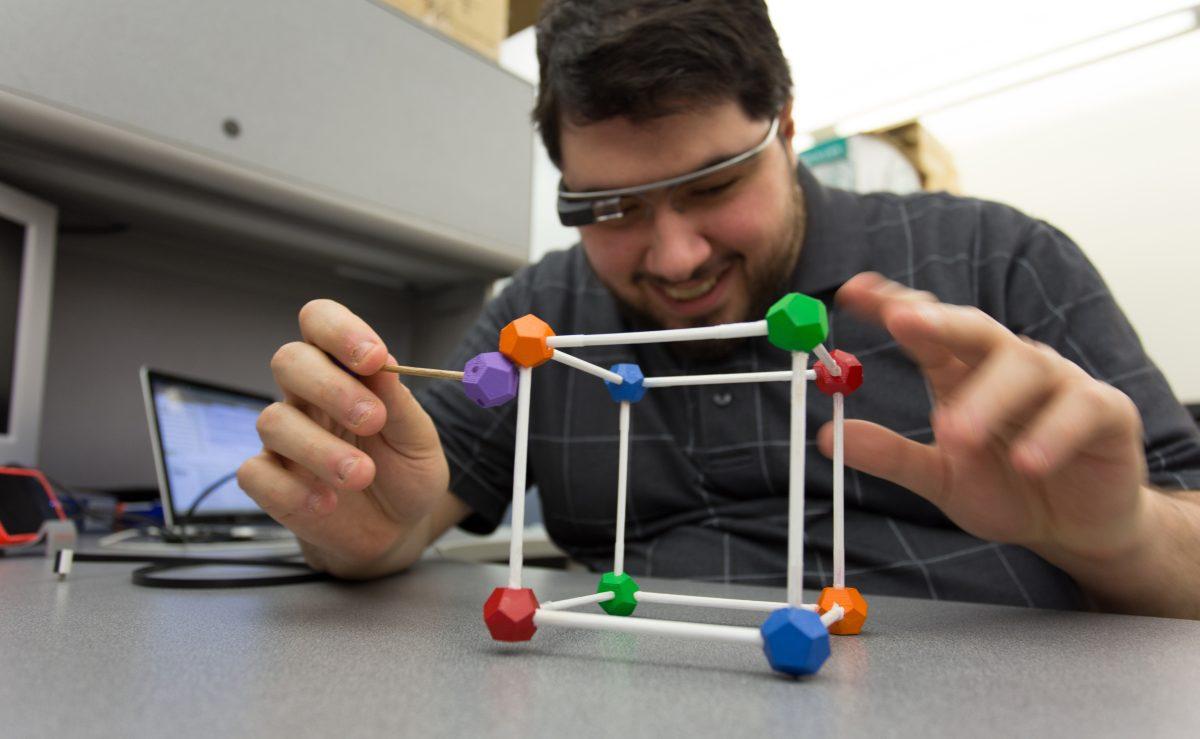

Google Glass has come to N.C. State’s library. Sina Bahram and Arpan Chakraborty, computer science graduate students, were the first to try the device and explore its possibilities.

Google Glass is a wearable computer with an optical head-mounted display. Priced at $1,500, Glass is only available to consumers who are participating in the Google Glass Explorer Program.

“What we want to do and said we would accomplish because we didn’t have Glass for more than a week, was to come up with a framework,” Bahram said. “We wanted a framework for folks to do programming that would make things cooler and easier.”

Bahram and Chakraborty are part of a lab group within the computer science department called the Knowledge Discovery Lab.

Chakraborty said the lab concentrates on human-computer interaction applications, as well as accessibility within that domain. Its members write applications and create tools with particular attention to people with varying levels of visual impairment, according to Chakraborty.

“We’re focused on how we can help them,” Chakraborty said.

According to Bahram, one of the first applications the lab developed was a talking compass, which was written by Chakraborty. Bahram said the application was straightforward: it would announce aloud the direction he was facing.

“One of the things with the talking compass application was that it could be used in an accessibility space in a navigational role,” Chakraborty said.

Chakraborty said Google Glass could take an application like the one he created a step further.

“[Google Glass can be used] if you are learning some skill and you need both of your hands for manipulation, but you’re not an expert yet, and there is some expert somewhere else sitting in a different part of the world, you can ask them live for tips and guidelines,” Chakraborty said. “They can potentially see your video screen, look at what you’re looking at and even mark on your display with things that they want you to pay attention to.”

Bahram and Chakraborty both said the placement of information on the Google Glass screen was thoughtfully designed.

“[The information] is not going to distract you from your task,” Chakraborty said. “You can still do normal things while referencing it.”

According to Bahram, the beta version of Google Glass they worked with seems to be only a first step toward what the device can become.

“This is an area where you have a lot of explosive growth in the beginning and then it kind of flattens out,” Bahram said. “There’s a lot of initial advancement left to go with Google Glass.”

Chakraborty said he thinks Google itself is not exactly sure how to promote Glass as a usable device.

“They have a page where they say that even though you feel the urge to continuously use it all the time, don’t do that because it is not designed for that,” Chakraborty said. “Their point of view is that it’s meant for short bursts of interaction, which will help you to get back to what you were doing in your real life.”

Based on their time with Google Glass, Bahram and Chakraborty agreed that its voice-based interactions need further development. According to Chakraborty, Glass still has a long way to go.

“An important thing that I feel needs to be mentioned is that it’s not that easy to use if you’re blind,” Bahram said. “People might think that it’s an odd statement to make, though, because it’s a very visual thing.”

According to Bahram, if Google Glass had access to TalkBack, Android’s screen reader, someone who is visually impaired would not need another person’s help to use the device or navigate its screens.

“They could start programming the device…to help them with their everyday life,” Bahram said. “Let’s say they create a color reader or a Google image search for a dollar bill to figure out what currency it is. There are all sorts of applications that you could immediately think of that could be assistive.”

However, the two agreed that because Google Glass is Android-based, the platform for such advancements is already there.

“They [Google] are promoting the voice-based interactions a lot more,” Chakraborty said. “Although they have a lot of things to sort out, I think they’re going in the right direction.”